The XLike project is about data analytics, and there can be no data analytics without data. Therefore, one of the first tasks in the project was to acquire a large-scale news dataset from the internet.

We set about this by creating a continuous news aggregator. This piece of software provides a real-time aggregated stream of textual news items published by RSS-enabled news providers across the world. The pipeline performs the following main steps:

1. Periodically crawls a list of news feeds and obtains links to news articles

2. Downloads the articles, taking care not to overload any of the hosting servers

3. Parses each article to obtain
   - (a) potential new RSS sources, to be used in step (1)
   - (b) a cleartext version of the article body

4. Processes articles with Enrycher

5. Exposes two streams of news articles (cleartext and Enrycher-processed) to end users.
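The download step only says that the aggregator avoids overloading hosting servers. A common way to achieve this is a per-host politeness delay. The sketch below assumes a fixed minimum delay between two requests to the same host; the `PoliteScheduler` class and its `min_delay` parameter are hypothetical and not taken from the aggregator's code.

```python
import heapq
import time
from collections import deque
from urllib.parse import urlsplit


class PoliteScheduler:
    """Hands out article URLs so that each host is contacted at most once
    every `min_delay` seconds. Hypothetical sketch, not the aggregator's code."""

    def __init__(self, min_delay=5.0, clock=time.monotonic):
        self.min_delay = min_delay   # assumed per-host politeness delay (seconds)
        self.clock = clock           # injectable clock, handy for testing
        self.pending = {}            # host -> FIFO of URLs waiting to be fetched
        self.last_hit = {}           # host -> time of the most recent request
        self.heap = []               # (earliest allowed time, host) per host with pending URLs

    def add(self, url):
        host = urlsplit(url).netloc
        if host not in self.pending:
            self.pending[host] = deque()
            # A host seen before must still respect the delay since its last request.
            earliest = self.last_hit.get(host, float("-inf")) + self.min_delay
            heapq.heappush(self.heap, (earliest, host))
        self.pending[host].append(url)

    def next_url(self):
        """Return a URL whose host may be contacted now, or None if every
        host with pending work is still in its cool-down period."""
        now = self.clock()
        if not self.heap or self.heap[0][0] > now:
            return None
        _, host = heapq.heappop(self.heap)
        url = self.pending[host].popleft()
        self.last_hit[host] = now
        if self.pending[host]:
            heapq.heappush(self.heap, (now + self.min_delay, host))
        else:
            del self.pending[host]
        return url
```

A downloader loop would call `next_url()` repeatedly and sleep briefly whenever it returns `None`. Keying the heap on the earliest allowed time means the scheduler never has to scan hosts that are still cooling down.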

The data sources in step (1) include:

- roughly 75,000 RSS feeds from 1,900 sites, discovered on the internet (see step 3a)

- a subset of Google News collected with a specialized periodic crawler

- private feeds provided by XLike project partners (Bloomberg, STA)

Check out the real-time demo at http://newsfeed.ijs.si/visual_demo/ (which does not show the contents of private feeds). The rate is bursty but averages roughly one article per second.

Cleartext extraction

News articles obtained from the internet need to be cleaned of extraneous markup and content (navigation, headers, footers, ads, …).

We use a purely heuristic approach that operates on the DOM tree. With the fast libxml package, parsing is not a limiting factor. The core heuristic takes the first sufficiently large DOM element that contains enough promising <p> elements; failing that, it takes the first <td> or <div> element that contains enough promising text. What counts as “promising” is decided by relatively standard metrics that are also used in related work, most importantly the amount of markup within a node. None of the heuristics are site-specific.
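The two-pass heuristic can be sketched as follows. This is a minimal illustration under several assumptions: the page is parsed with Python's standard `xml.etree.ElementTree`, which only accepts well-formed XHTML (the aggregator itself uses libxml, whose HTML parser is far more forgiving); “promising” is approximated as enough text combined with a low tags-per-character ratio; and all threshold constants are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical thresholds, chosen only for illustration.
MIN_TEXT_CHARS = 80         # a node needs at least this much text to be "promising"
MAX_MARKUP_RATIO = 0.1      # at most this many descendant tags per character of text
MIN_PROMISING_P = 2         # "enough" promising <p> elements
MIN_CONTAINER_CHARS = 300   # "large enough" element / "enough" text


def text_of(elem):
    """All text inside elem, with whitespace collapsed."""
    return " ".join("".join(elem.itertext()).split())


def is_promising(elem):
    """Enough text and little markup inside the node."""
    text = text_of(elem)
    n_tags = sum(1 for _ in elem.iter()) - 1  # descendants, excluding elem itself
    return len(text) >= MIN_TEXT_CHARS and n_tags / max(len(text), 1) <= MAX_MARKUP_RATIO


def extract_body(root):
    # Pass 1: first large-enough element (document order) whose direct
    # <p> children include enough promising ones.
    for elem in root.iter():
        if len(text_of(elem)) < MIN_CONTAINER_CHARS:
            continue
        promising = [p for p in elem.findall("p") if is_promising(p)]
        if len(promising) >= MIN_PROMISING_P:
            return "\n".join(text_of(p) for p in promising)
    # Pass 2: first <td> or <div> that itself contains enough promising text.
    for elem in root.iter():
        if elem.tag in ("td", "div") and len(text_of(elem)) >= MIN_CONTAINER_CHARS \
                and is_promising(elem):
            return text_of(elem)
    return None
```

Because `root.iter()` walks the tree in document order, the first matching element wins, which mirrors the “take the first” rule; navigation and footer blocks are skipped because they are either too short or too markup-heavy.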

We achieve precision and recall of about 94%, which is comparable to the state of the art.
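The text does not say how precision and recall were measured. One common convention for evaluating boilerplate removal, assumed here purely for illustration, compares the extracted text with a manually cleaned version of the same article at the word level:

```python
from collections import Counter


def token_precision_recall(extracted: str, gold: str):
    """Word-level precision and recall of extracted text against a
    hand-cleaned gold version, using bag-of-words overlap."""
    ext = Counter(extracted.split())
    ref = Counter(gold.split())
    overlap = sum((ext & ref).values())             # multiset intersection size
    precision = overlap / max(sum(ext.values()), 1)  # share of extracted words that belong
    recall = overlap / max(sum(ref.values()), 1)     # share of real body words recovered
    return precision, recall


print(token_precision_recall("Home the cat sat", "the cat sat on the mat"))  # (0.75, 0.5)
```

Here the stray navigation word “Home” lowers precision, while the missing “on the mat” lowers recall. Per-article scores would then be averaged over an evaluation set.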

Data enrichment

One of the goals of XLike is to provide advanced enrichment services on top of the cleartext articles. Some tools for English and Slovene are already in place: for these languages, we use Enrycher (http://enrycher.ijs.si/) to annotate each article with the named entities appearing in the text (resolved to Wikipedia when possible), determine its sentiment, and categorize the document into the general-purpose DMOZ category hierarchy.

We also annotate each article with its language. Detection combines Google’s open-source Compact Language Detector (CLD) library, which covers mainstream languages, with a separate Bayesian classifier trained on character trigram frequency distributions from a large public corpus of over a hundred languages. We use CLD first and, in the rare cases where the article’s language is not supported by CLD, fall back to the Bayesian classifier. The error introduced by automatic detection is below 1% (McCandless, 2011).
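The fallback logic can be sketched as below. CLD is represented by a placeholder callable `primary` that returns `None` when a language is unsupported; this interface is an assumption for illustration, not CLD's actual API. The fallback is a textbook multinomial naive Bayes classifier over character trigrams, with add-one smoothing and uniform priors, trained here on toy strings rather than on the large multilingual corpus the text mentions.

```python
import math
from collections import Counter


def trigrams(text):
    """Character trigrams of the lower-cased text, padded with spaces."""
    padded = f"  {text.lower()} "
    return [padded[i:i + 3] for i in range(len(padded) - 2)]


class TrigramNaiveBayes:
    """Multinomial naive Bayes over character trigrams (add-one smoothing,
    uniform priors over languages)."""

    def __init__(self, training):  # training: {language code: sample text}
        self.counts = {lang: Counter(trigrams(t)) for lang, t in training.items()}
        self.totals = {lang: sum(c.values()) for lang, c in self.counts.items()}
        self.vocab = len(set().union(*self.counts.values()))

    def classify(self, text):
        def log_likelihood(lang):
            c, total = self.counts[lang], self.totals[lang]
            return sum(math.log((c[g] + 1) / (total + self.vocab)) for g in trigrams(text))
        return max(self.counts, key=log_likelihood)


def detect_language(text, primary, fallback):
    """Try the primary detector first (CLD in the aggregator); if it
    returns None, fall back to the trigram classifier."""
    lang = primary(text)
    return lang if lang is not None else fallback.classify(text)
```

Keeping the primary detector behind a plain callable makes the fallback order explicit and lets either component be swapped or tested in isolation.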

Language distribution

We cover 37 languages that each average 100 or more articles per day. English is the most frequent, with an estimated 54% of articles. German, Spanish and French each account for 3 to 10 percent of the articles. Other languages comprising at least 1% of the corpus are Chinese, Slovenian, Portuguese, Korean, Italian and Arabic.

System architecture

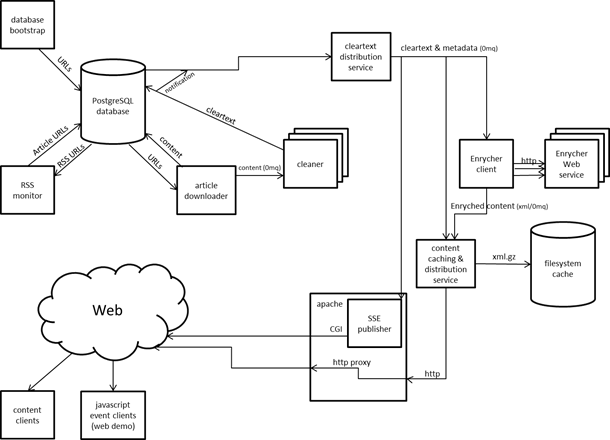

The aggregator consists of several components, shown in the flowchart. The early stages of the pipeline (article downloader, RSS downloader, cleartext extractor) communicate via a central database; the later stages (cleartext extractor, enrichment services, content distribution services) form a true unidirectional pipeline and communicate through ZeroMQ sockets.
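The later, pipelined stages can be illustrated with a minimal in-process sketch. Python's thread-safe `queue.Queue` stands in for the ZeroMQ sockets here (ZeroMQ's PUSH/PULL pattern is one natural fit, though the text does not say which socket types are used), and the stage functions in the usage example are hypothetical placeholders for the cleartext extractor and the enrichment services.

```python
import queue
import threading

STOP = object()  # sentinel that shuts the pipeline down stage by stage


def stage(func, inbox, outbox):
    """Start a worker that reads items from inbox, applies func and pushes
    the result downstream. Data only ever flows inbox -> outbox."""
    def run():
        while True:
            item = inbox.get()
            if item is STOP:
                outbox.put(STOP)  # propagate shutdown to the next stage
                return
            outbox.put(func(item))
    thread = threading.Thread(target=run, daemon=True)
    thread.start()
    return thread


def run_pipeline(articles, steps):
    """Chain `steps` so that each stage talks only to its successor."""
    queues = [queue.Queue() for _ in range(len(steps) + 1)]
    threads = [stage(f, queues[i], queues[i + 1]) for i, f in enumerate(steps)]
    for article in articles:
        queues[0].put(article)
    queues[0].put(STOP)
    results = []
    while (item := queues[-1].get()) is not STOP:
        results.append(item)
    for t in threads:
        t.join()
    return results


# Hypothetical stand-ins for the cleartext extractor and an enrichment service.
clean = lambda html: html.replace("<p>", "").replace("</p>", "")
enrich = lambda text: {"text": text, "n_words": len(text.split())}
print(run_pipeline(["<p>a b</p>", "<p>c</p>"], [clean, enrich]))
```

Since each stage only knows its inbox and outbox, replacing the in-memory queues with sockets is what allows the real stages to run as separate processes or on separate machines.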

Responsiveness

We poll the RSS feeds at varying time intervals from 5 minutes to 12 hours depending on the feed’s past activity. Google News is crawled every two hours. All crawling is currently performed from a single machine; precautions are taken not to overload any news source with overly frequent requests.
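The text gives only the bounds of the polling interval (5 minutes to 12 hours) and says it depends on the feed's past activity. The exact rule is not described; the sketch below assumes a simple multiplicative scheme, and `next_poll_interval` is a hypothetical helper.

```python
MIN_INTERVAL = 5 * 60        # 5 minutes, in seconds (bound from the text)
MAX_INTERVAL = 12 * 60 * 60  # 12 hours, in seconds (bound from the text)


def next_poll_interval(current, new_items):
    """Hypothetical rule: poll twice as often after a poll that found new
    articles, half as often after one that found none, and clamp the
    result to [MIN_INTERVAL, MAX_INTERVAL]."""
    proposed = current / 2 if new_items > 0 else current * 2
    return max(MIN_INTERVAL, min(MAX_INTERVAL, proposed))


print(next_poll_interval(3600, 3))  # 1800.0: an active feed is polled more often
print(next_poll_interval(3600, 0))  # 7200: a quiet feed is polled less often
```

With this rule, a busy news site quickly settles near the 5-minute floor, while a feed that rarely updates drifts toward the 12-hour ceiling, which also limits the load on its server.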

Based on articles with known time of publication, we estimate 70% of articles are fully processed by our pipeline within 3 hours of being published, and 90% are processed within 12 hours.
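The text does not detail how this estimate was computed. Assuming a (publication time, processing time) pair is available for each article with a known publication time, the share processed within a given delay reduces to a simple count. The timestamps below are toy values, not measurements.

```python
from datetime import datetime, timedelta


def fraction_within(pairs, limit):
    """pairs: (published, processed) datetimes for articles whose publication
    time is known; returns the share processed within `limit` of publication."""
    if not pairs:
        return 0.0
    hits = sum(1 for published, processed in pairs if processed - published <= limit)
    return hits / len(pairs)


# Toy data: four articles processed 1, 2, 4 and 13 hours after publication.
t0 = datetime(2012, 1, 1)
pairs = [(t0, t0 + timedelta(hours=h)) for h in (1, 2, 4, 13)]
print(fraction_within(pairs, timedelta(hours=3)))   # 0.5
print(fraction_within(pairs, timedelta(hours=12)))  # 0.75
```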

Data dissemination

Once articles have passed through the preprocessing pipeline, contiguous groups of them are batched, and each batch is stored as a gzipped file on a separate distribution server. A file is created when its batch is large enough (so that files do not grow huge) or when it contains old enough articles. End users poll the distribution server for changes over HTTP. This adds some latency, but the approach is robust, scalable, simple to maintain and universally accessible.
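The flush rule (batch large enough, or articles old enough) can be sketched as follows. Several details are assumptions made only for illustration: the size and age limits, the JSON-lines payload, the file naming scheme, and measuring age from when an article enters the batch. The actual data formats are documented at http://newsfeed.ijs.si/. `Batcher` is a hypothetical class.

```python
import gzip
import json
import os
import time


class Batcher:
    """Collect articles and write them to a gzipped file once the batch is
    big enough or its oldest article has waited long enough."""

    def __init__(self, out_dir, max_items=1000, max_age=600.0, clock=time.time):
        self.out_dir = out_dir
        self.max_items = max_items  # hypothetical size limit (keeps files small)
        self.max_age = max_age      # hypothetical age limit in seconds (bounds latency)
        self.clock = clock
        self.items = []
        self.oldest = None          # time the first item of the current batch arrived
        self.n_files = 0

    def add(self, article):
        if not self.items:
            self.oldest = self.clock()
        self.items.append(article)
        return self.maybe_flush()

    def maybe_flush(self):
        """Write the batch if either limit is reached; return the file path or None.
        Should also be called periodically so quiet periods still produce files."""
        if not self.items:
            return None
        too_big = len(self.items) >= self.max_items
        too_old = self.clock() - self.oldest >= self.max_age
        if not (too_big or too_old):
            return None
        path = os.path.join(self.out_dir, f"batch-{self.n_files:06d}.jsonl.gz")
        with gzip.open(path, "wt", encoding="utf-8") as f:
            for article in self.items:
                f.write(json.dumps(article) + "\n")
        self.n_files += 1
        self.items, self.oldest = [], None
        return path
```

Clients then only need to poll for new file names over HTTP and download each finished file once, which is what keeps the distribution side simple and cacheable.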

The stream is freely available for research purposes. Please visit http://newsfeed.ijs.si/ for details on obtaining an account and using the stream (data formats, APIs).